Trends We are Watching in 2026

Trends We are Watching in the Survey Industry in 2026

The new year is always a good time to assess how emerging trends from the past year will affect the year ahead, whether those trends are in foreign policy, food, or even weddings. Of course, at Survey 160 we are focused on trends in the public opinion research world. Here are a few trends we are following closely and believe will shape survey research in 2026.

Research We Read in 2025 And Just Can’t Stop Thinking About

Happy New Year! Before we start 2026 in earnest, we wanted to share with you some research from the year that just passed that we still cannot stop thinking about.

LLMs and Survey Research

Survey 160 Tracking Poll, November 2025 Update

Today we are releasing the results from our ongoing Survey 160 tracking poll conducted November 6-10, 2025. This installment zeroes in on a hot question: which political party do voters think is more corrupt? And it includes results from an experiment testing whether telling voters of a recent corruption allegation involving the Trump administration changes that view. Alongside the experiment, we continue to track public opinion on key political issues and trends, including presidential approval and the generic House ballot.

Best Practices for Text Message Surveys

Introducing the Survey 160 Tracking Poll

Today we are releasing the first set of results from a pilot of a new Survey 160 project: a recurring tracking poll. These polls will track presidential approval and the generic House ballot, among other topics to be discussed in upcoming releases. These surveys will also serve as a test bench for our ongoing methodological research program.

The Limits of Simulation in Public Opinion Research

Can computer programs supplant public opinion data collection? That is the promise of “synthetic respondents” or “silicon samples.” We are not so sure.

The dream of replacing human survey respondents with computer-generated ones is far from new. As historian Jill Lepore documents in her book If/Then, in the 1960s the Simulmatics Corporation sold predictions about human behavior and opinion from its “People Machine” computer simulation to political campaigners, journalists, consumer marketers, and even the Pentagon. Ultimately, that corporation failed when it could not deliver what it sold. While today’s technology is very different from that era’s, the central if unfulfilled promise (that you can extrapolate from previously collected data to reliably predict future opinion or behavior not present in the training data set, avoiding having to go and talk to real people) continues to the present in the idea of “synthetic respondents” generated by Large Language Models (LLMs).

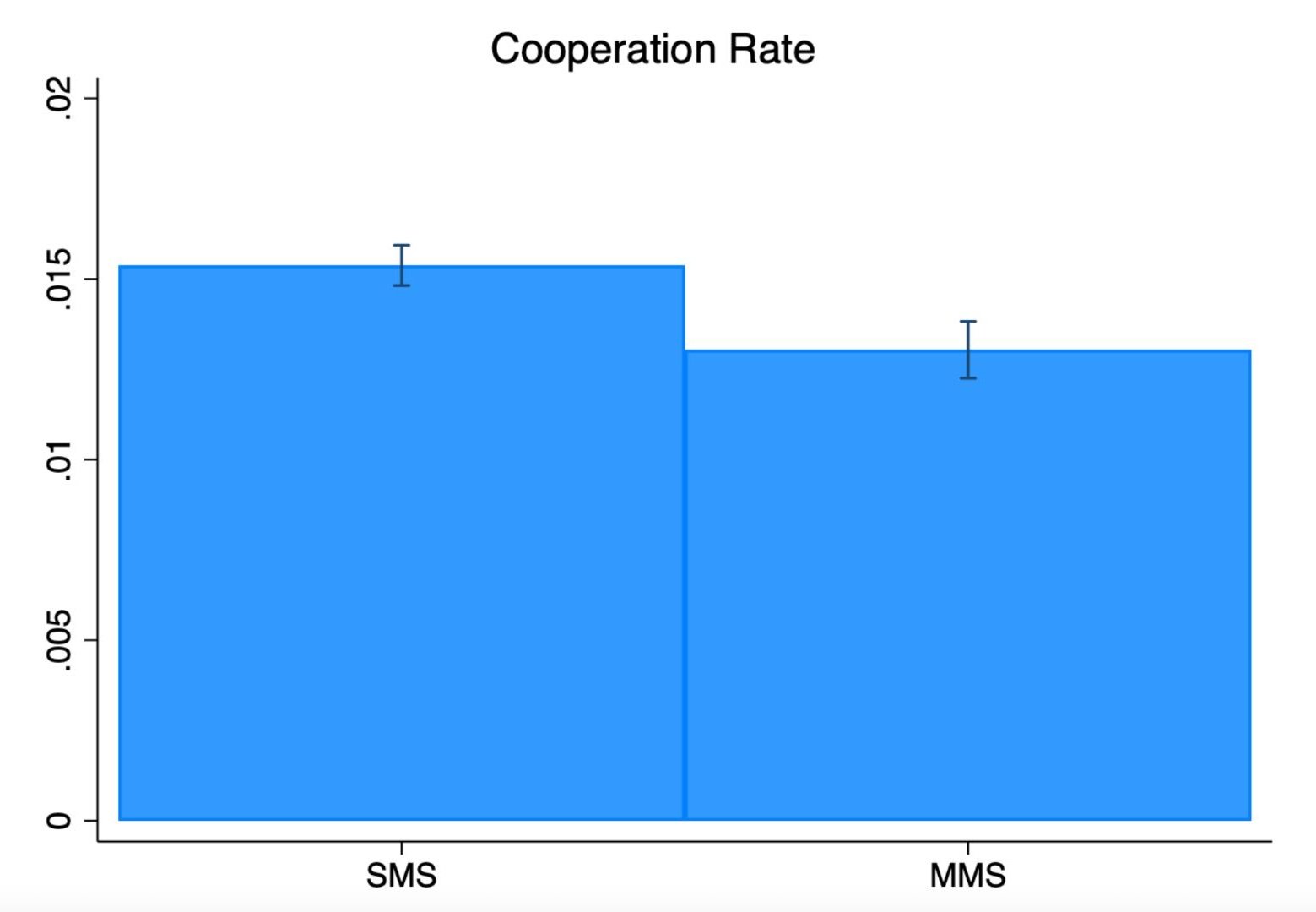

When not to use MMS for surveys

An increasingly common way to use text messaging for surveys is not SMS (short message service) but MMS (multimedia messaging service). Some believe that MMS allows survey firms to share an image like a logo to increase their credibility. Others are attracted to the larger number of characters allowed within a single MMS segment, despite generally higher costs per segment (though that may be a selling point for other firms offering MMS services). And others may use MMS out of a misguided belief that it increases deliverability rates; several years ago MMS could be sent via lines not registered with the carriers’ 10DLC system, though that is no longer true.

Comparing and Combining Text-to-Web and Panel-to-Web Surveys (Part 2)

In our last blog post, we described an experiment we conducted in 2024 and presented at AAPOR this past May to compare (and test strategies for combining) text-to-web and panel-to-web data. We found that the matched panel product we used was less expensive than text-to-web, but it struggled to hit target numbers of completed interviews in two of the three mid-sized states included in the study. Panel respondents were more professional, meaning that they answered much more quickly, passed attention checks at somewhat higher rates, and reported taking many more other surveys on average. But panel respondents were also less likely to report having a college degree and were less politically engaged.

Comparing and Combining Text-to-Web and Panel-to-Web Surveys (Part 1)

Last week at the annual meeting of the American Association for Public Opinion Research, we presented internal research conducted just before the November 2024 election. Although we focus on using text messages to field surveys, the vast majority of the research we do for clients is part of mixed-mode projects, which is why we have been engaged in a multi-year research agenda to identify best practices for combining data across modes. For example, at last year’s AAPOR conference we presented research, conducted in 2023, on comparing and combining text message surveys with phone surveys.

How long should we stay in the field? (Part II)

Today we return with Part II of “How long should we stay in the field?”, this time looking at how long to leave text-to-web surveys open.

In the lead-up to the 2023 Kentucky governor’s election, we fielded an experiment randomizing a voter-file-sampled list to receive either text messages (or, for landlines, IVR calls) or live interviewer calls. We wrote about some other findings from this survey previously. In the first round, we waited a couple of days after the initial text messages before sending a follow-up to non-responders.

How long should we stay in the field? (Part I)

Today we want to share some research, presented at last year’s AAPOR conference, on when to stop fielding.

The persistence and asynchronous nature of text message communication make this a compound question: how long should we leave open the online component of a text-to-web survey, and should we send follow-up requests for participation to non-responders?

Improving Survey Performance with a Better Texting Interface

Our software is custom- and purpose-built to conduct surveys. We are always on the lookout for software improvements that can help us better serve our sole mission of conducting surveys efficiently and accurately. Over the past several months, we have overhauled several aspects of our application to increase throughput and interviewer speed so that we can reduce costs for our clients, whether they are doing election polls, media measurement, or customer feedback surveys.

Timing your Texting

The Public’s Concerns about AI (and “Probability of Doom”)

What people really think of artificial intelligence

Survey Mode and Polling Accuracy

Can Text Message Surveys Produce Accurate Results?

How to tell if the survey text message you’re getting is real

Using texting with other modes to optimize for cost and accuracy